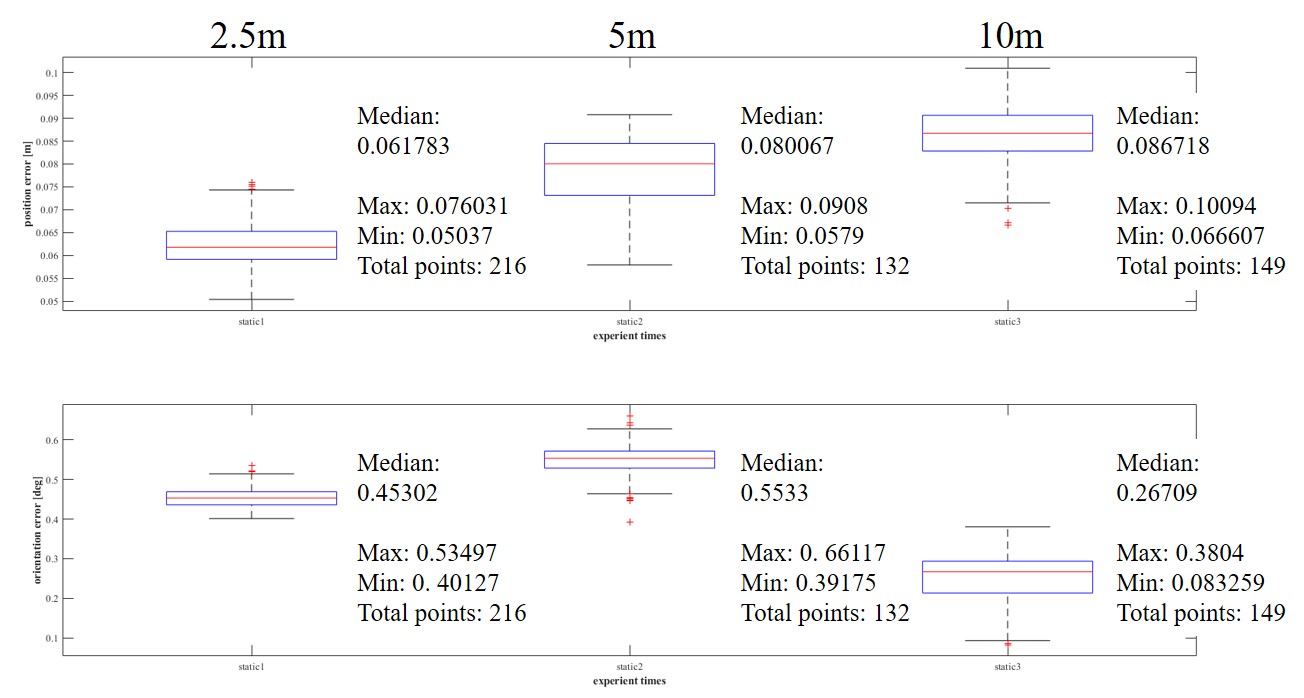

Static Errors

Mutual localization of two robots in a static setting, at distances of 2.5 m, 5 m and 10 m. The median distance error is under 0.1 m and the median orientation error is under 0.4°.
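The page does not define how the median errors are computed. The sketch below shows one common way to compute them, and it rests on assumptions. It takes "distance error" to mean the Euclidean norm of the position error. It takes "orientation error" to mean the angle of the residual rotation between the estimate and the ground truth. The function names are illustrative.

```python
import numpy as np

def rotation_angle_deg(R_est, R_gt):
    """Angle (degrees) of the residual rotation R_gt^T @ R_est."""
    R_err = R_gt.T @ R_est
    c = (np.trace(R_err) - 1.0) / 2.0
    # Clip to guard against values slightly outside [-1, 1] from rounding.
    return float(np.degrees(np.arccos(np.clip(c, -1.0, 1.0))))

def median_errors(p_est, p_gt, R_est, R_gt):
    """Median position error (m) and median orientation error (deg).

    p_est, p_gt: (N, 3) relative positions; R_est, R_gt: N rotation matrices.
    Assumes 'distance error' = ||p_est - p_gt|| (an assumption of this sketch).
    """
    dist_err = np.linalg.norm(np.asarray(p_est) - np.asarray(p_gt), axis=1)
    ang_err = [rotation_angle_deg(a, b) for a, b in zip(R_est, R_gt)]
    return float(np.median(dist_err)), float(np.median(ang_err))
```

The median suits these plots because a few outlier frames, such as brief occlusions, barely move it.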

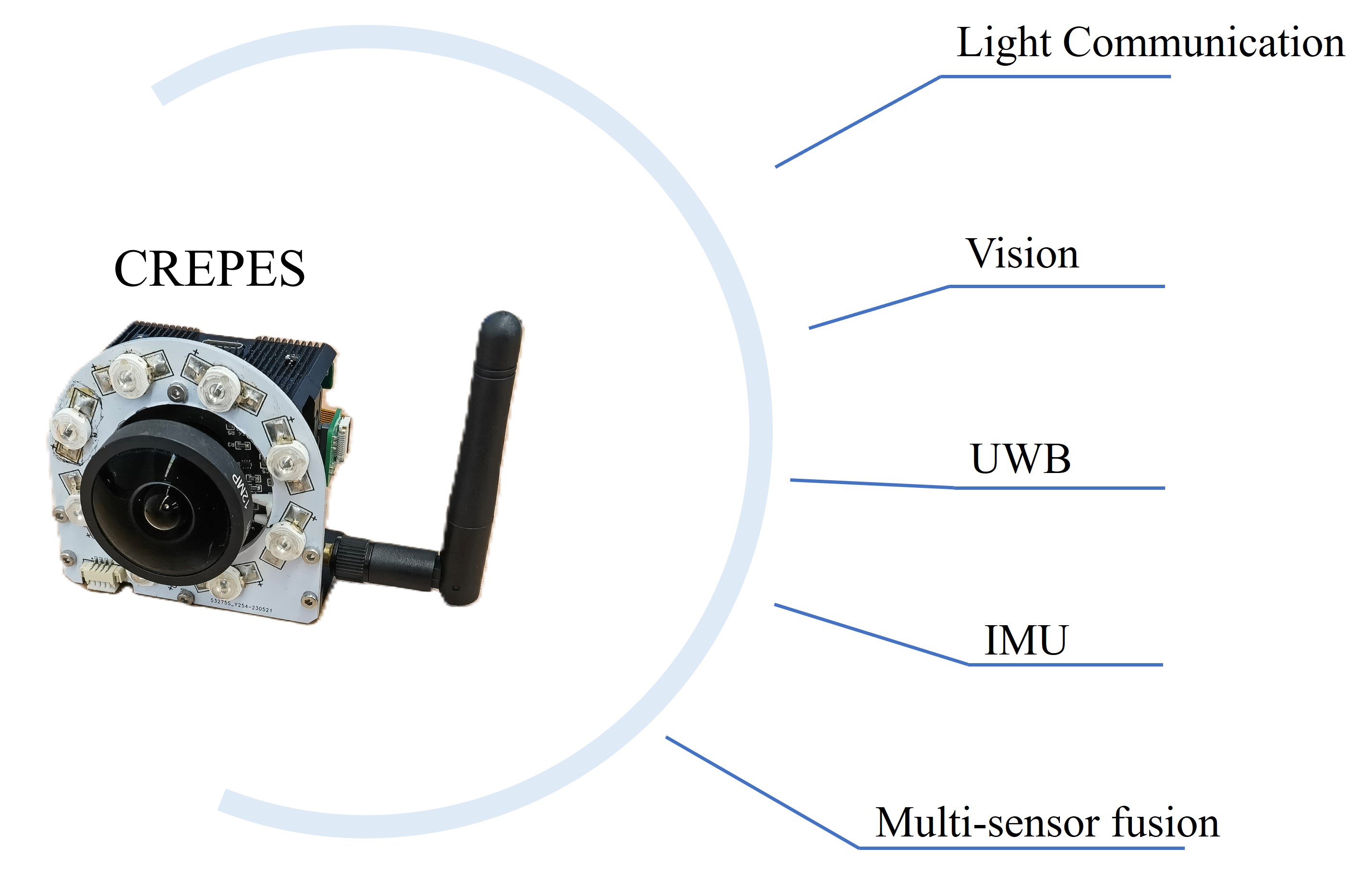

Mutual localization plays a crucial role in multi-robot cooperation. This paper proposes CREPES, a novel system for six-degree-of-freedom (6-DOF) relative pose estimation in multi-robot systems.

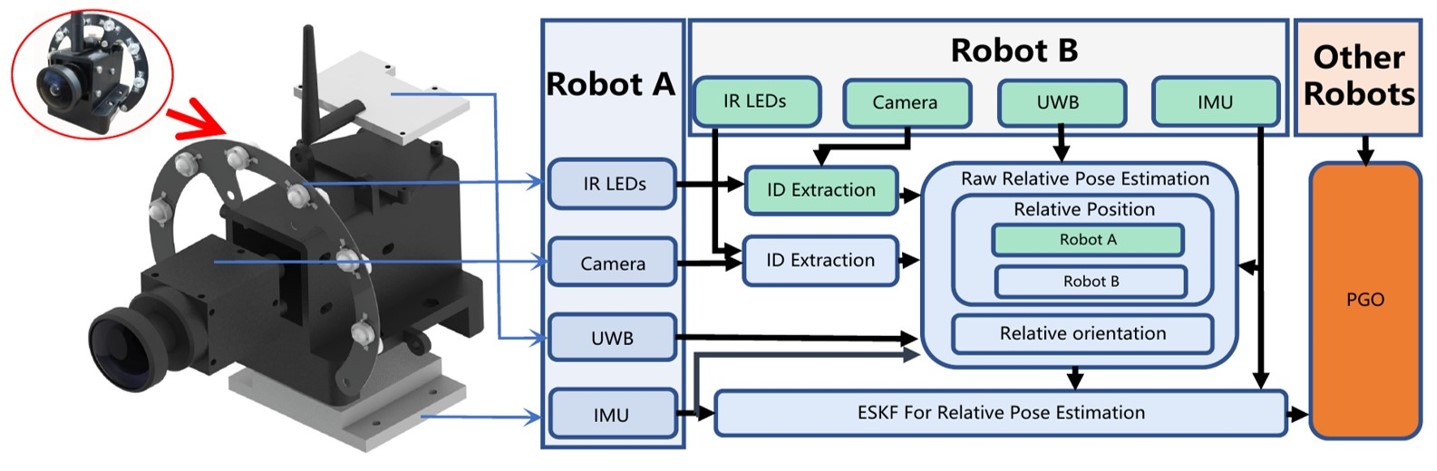

CREPES has a compact hardware design consisting of active infrared (IR) LEDs, an IR fish-eye camera, an ultra-wideband (UWB) module and an inertial measurement unit (IMU). IR light communication lets the system solve data association between visual detections and UWB ranges. Range measurements from the UWB and direction measurements from the camera yield a 3-DOF relative position estimate. The system combines the mutual relative positions between neighbors with the gravity constraints provided by the IMUs. This lets it estimate the 6-DOF relative pose from a single frame of sensor measurements.
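The page does not describe the IR coding scheme, so the sketch below rests on several hypothetical choices. It shows how IR light communication can tie a camera detection to the right UWB range. Each robot's LEDs blink an ID as an on-off-keyed code word of `ID_BITS` bits, sent most significant bit first. The camera is assumed to be already synchronized to the start of each code word. `Detection`, `decode_id`, `associate`, `ID_BITS` and `THRESHOLD` are all illustrative names, not CREPES APIs.

```python
from dataclasses import dataclass

ID_BITS = 4      # hypothetical: IDs are sent as 4-bit on/off code words
THRESHOLD = 0.5  # hypothetical normalized brightness threshold for "LED on"

@dataclass
class Detection:
    """A tracked IR blob in the fish-eye image (hypothetical structure)."""
    bearing: tuple    # direction to the blob in the camera frame
    brightness: list  # normalized blob intensity over recent frames

def decode_id(brightness):
    """Decode an on-off-keyed ID from the last ID_BITS samples, MSB first."""
    value = 0
    for sample in brightness[-ID_BITS:]:
        value = (value << 1) | (1 if sample > THRESHOLD else 0)
    return value

def associate(detections, uwb_ranges):
    """Pair each visual detection with the UWB range of the same robot ID.

    uwb_ranges: dict mapping neighbor ID -> range in metres.
    Returns dict ID -> (bearing, range); unmatched detections are dropped.
    """
    matched = {}
    for det in detections:
        rid = decode_id(det.brightness)
        if rid in uwb_ranges:
            matched[rid] = (det.bearing, uwb_ranges[rid])
    return matched
```

Because the ID comes from the light itself, the join needs no geometric gating. A detection is paired by identity, not by guessing which blob is nearest to which range.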

In addition, we design an estimator based on the error-state Kalman filter (ESKF) to enhance system accuracy and robustness. When multiple neighbors are available, a Pose Graph Optimization (PGO) algorithm further improves accuracy. We conduct extensive experiments to demonstrate CREPES' accuracy, both between robot pairs and within a team of robots, as well as its performance under challenging conditions.
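The page does not give the ESKF's state or models. The sketch below is a deliberately reduced illustration of the error-state structure on a single angle, the relative yaw. It keeps a nominal state, propagates it with a yaw rate, and estimates a small error in an update step. It then injects that error into the nominal state and resets it. Taking `yaw_rate` to be the difference of the two robots' gravity-aligned gyro z-rates is an assumption. So are the noise parameters and the class name `YawESKF`. This is not the authors' filter, which estimates a full 6-DOF pose.

```python
import math

def wrap(a):
    """Wrap an angle to [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi

class YawESKF:
    """Minimal error-state Kalman filter on relative yaw (hypothetical sketch)."""

    def __init__(self, yaw0, p0, q, r):
        self.yaw = yaw0  # nominal state (rad)
        self.P = p0      # variance of the error state delta_yaw (rad^2)
        self.q = q       # assumed process noise density (rad^2 / s)
        self.r = r       # assumed variance of a raw yaw measurement (rad^2)

    def predict(self, yaw_rate, dt):
        # Nominal state: integrate the (assumed) relative yaw rate.
        self.yaw = wrap(self.yaw + yaw_rate * dt)
        # Error-state transition is identity in this 1-D model; only P grows.
        self.P += self.q * dt

    def update(self, yaw_meas):
        # Innovation is wrapped so that 179 deg vs -179 deg counts as 2 deg.
        innov = wrap(yaw_meas - self.yaw)
        k = self.P / (self.P + self.r)
        delta = k * innov                   # estimated error state
        self.yaw = wrap(self.yaw + delta)   # inject error into nominal state
        self.P = (1.0 - k) * self.P         # error state is reset to zero
```

For a purely additive position state, this structure collapses to an ordinary Kalman filter. The error-state form pays off for rotations, where corrections stay small and local even though the nominal attitude lives on a manifold.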

The hardware consists of an IMU, a UWB module, IR LEDs and a camera. The Raw Relative Pose Estimation software module collects the neighbors' IDs (from ID Extraction), directional measurements (from Relative Direction), distances (from UWB) and IMU data to compute a raw estimate. An ESKF module then filters this result using the IMU data. When multiple neighbors are present, a PGO module is employed to further improve performance.
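The sketch below shows, under stated assumptions, how a raw relative pose could come from one frame of measurements. It uses one UWB range plus the bearing each robot measures toward the other. It assumes both bearings have already been rotated into gravity-aligned frames using IMU roll and pitch, so the only unknown rotation is a yaw `psi`. If B's position in A's frame is `p_ab` and A's position in B's frame is `p_ba`, then `p_ab = -Rz(psi) @ p_ba`. The yaw therefore follows from the horizontal angles of the two vectors. The function names are illustrative, and this is not the authors' exact solver.

```python
import numpy as np

def rot_z(psi):
    """Rotation matrix for a yaw of psi radians about the gravity axis."""
    c, s = np.cos(psi), np.sin(psi)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def relative_position(distance, bearing):
    """3-DOF position: UWB range times the unit camera bearing."""
    b = np.asarray(bearing, dtype=float)
    return distance * b / np.linalg.norm(b)

def yaw_from_mutual_positions(p_ab, p_ba):
    """Yaw of frame B relative to frame A, from p_ab = -Rz(psi) @ p_ba.

    Both vectors must be expressed in gravity-aligned frames (assumption).
    Degenerate if the robots are stacked vertically (zero horizontal part).
    """
    p_ab = np.asarray(p_ab, dtype=float)
    p_ba = np.asarray(p_ba, dtype=float)
    psi = np.arctan2(-p_ab[1], -p_ab[0]) - np.arctan2(p_ba[1], p_ba[0])
    return float((psi + np.pi) % (2 * np.pi) - np.pi)

def raw_relative_pose(distance, bearing_ab, bearing_ba):
    """Raw pose of B in A's frame: rotation Rz(psi) and translation p_ab."""
    p_ab = relative_position(distance, bearing_ab)
    p_ba = relative_position(distance, bearing_ba)
    return rot_z(yaw_from_mutual_positions(p_ab, p_ba)), p_ab
```

For example, B might see A along `[0, 3, 4]` at a 5 m UWB range while A sees B along `-rot_z(0.7) @ [0, 3, 4]`. In that case `raw_relative_pose` returns a 0.7 rad yaw and B's position in A's frame. In this sketch the gravity constraint removes two rotational unknowns. The mutual bearing then fixes the third.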

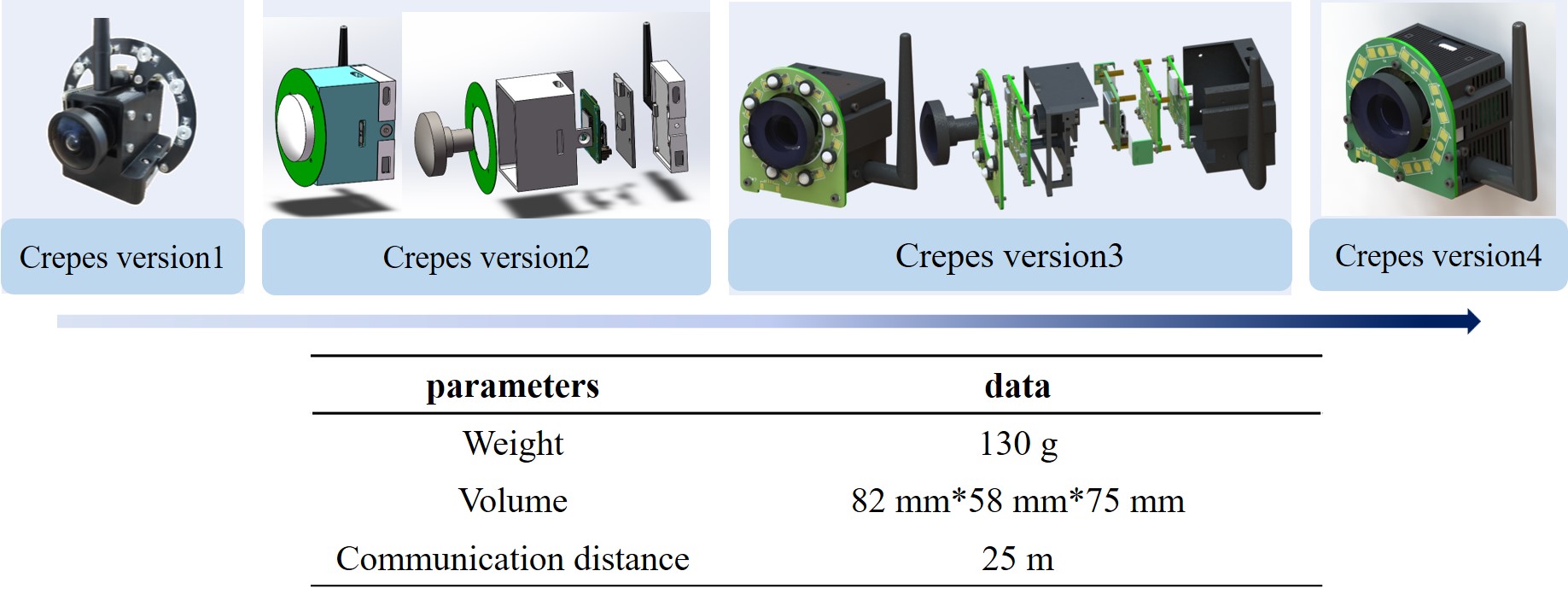

To improve portability and reduce the number of hardware output ports required, the CREPES hardware has gone through four design iterations, from Crepes1 to Crepes4. The weight, volume and communication distance of each version are compared.


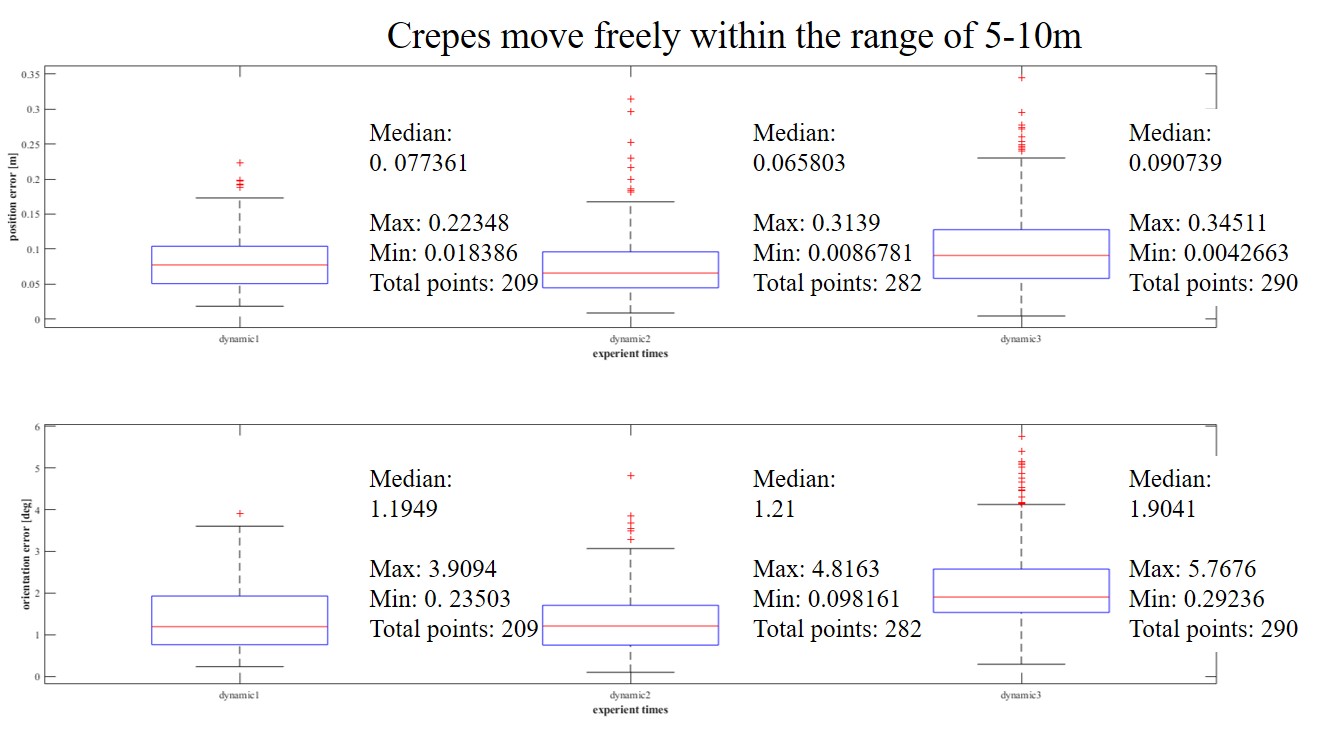

Mutual localization of two robots in a dynamic setting, at distances between 5 m and 10 m. The median distance error is under 0.1 m and the median orientation error is under 2°.

Position estimation under occlusion is a major challenge. We conduct experiments with five robots in two scenarios with different levels of occlusion. The following figure shows the ground-truth positions and the remapped relative positions of the five robots.
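Occlusion is exactly where fusing measurements from several neighbors helps. When a pair of robots cannot see each other, their relative position can still be recovered through other robots, provided the measurement graph stays connected. The sketch below is a translation-only simplification of pose graph optimization, not the full 6-DOF PGO used by CREPES. It assumes every pairwise measurement `z_ij ≈ p_j - p_i` is already expressed in one shared gravity-aligned, yaw-resolved frame. It also anchors robot 0 at the origin and solves the resulting linear least-squares problem. `solve_translation_graph` is an illustrative name.

```python
import numpy as np

def solve_translation_graph(num_robots, edges):
    """Least-squares robot positions from pairwise relative positions.

    edges: list of (i, j, z_ij) with z_ij ~ p_j - p_i in a shared frame.
    Robot 0 is fixed at the origin to remove the global translation freedom.
    Returns an array of shape (num_robots, 3).
    """
    n = num_robots - 1  # unknowns: positions of robots 1..num_robots-1
    A = np.zeros((3 * len(edges), 3 * n))
    b = np.zeros(3 * len(edges))
    for k, (i, j, z) in enumerate(edges):
        rows = slice(3 * k, 3 * k + 3)
        b[rows] = z
        if j > 0:  # +p_j term (p_0 is fixed at zero)
            A[rows, 3 * (j - 1):3 * j] += np.eye(3)
        if i > 0:  # -p_i term
            A[rows, 3 * (i - 1):3 * i] -= np.eye(3)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.vstack([np.zeros(3), x.reshape(n, 3)])
```

With a loop such as 0→1→2 plus a direct 0→2 edge that disagrees by 0.3 m, least squares spreads the discrepancy evenly over the three edges. If a robot has no path to robot 0, its block is unobservable and `lstsq` returns only a minimum-norm guess.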

The UGV is controlled arbitrarily using CREPES and an MPC controller, and the UAV follows a circular trajectory.

Three drones are equipped with CREPES to obtain their relative positions and track the vehicle while flying around it.
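The page does not describe the tracking controller used in this demo, so the sketch below rests on stated assumptions. It shows how the relative vehicle position from CREPES could drive drones around a moving vehicle. Each drone gets a point on a circle centred on the vehicle, with the drones spaced 2π/N apart in phase. A proportional velocity command then stands in for the MPC controller mentioned above. The sketch also assumes all vectors share one gravity-aligned, yaw-aligned frame. `orbit_offset`, `velocity_command`, the radius, rate, height and gain are hypothetical.

```python
import math

def orbit_offset(i, num_drones, t, radius, omega, height):
    """Desired position of drone i relative to the vehicle at time t."""
    theta = omega * t + 2 * math.pi * i / num_drones  # equal phase spacing
    return (radius * math.cos(theta), radius * math.sin(theta), height)

def velocity_command(vehicle_rel, offset, gain):
    """Proportional stand-in for an MPC tracker (hypothetical).

    vehicle_rel: vehicle position as measured from the drone (via CREPES).
    The drone's position relative to the vehicle is -vehicle_rel, so the
    tracking error is offset - (-vehicle_rel) = offset + vehicle_rel.
    """
    return tuple(gain * (o + v) for o, v in zip(offset, vehicle_rel))
```

Because the targets are defined relative to the vehicle, this sketch needs no global map. It only needs the relative position, which is what CREPES provides.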

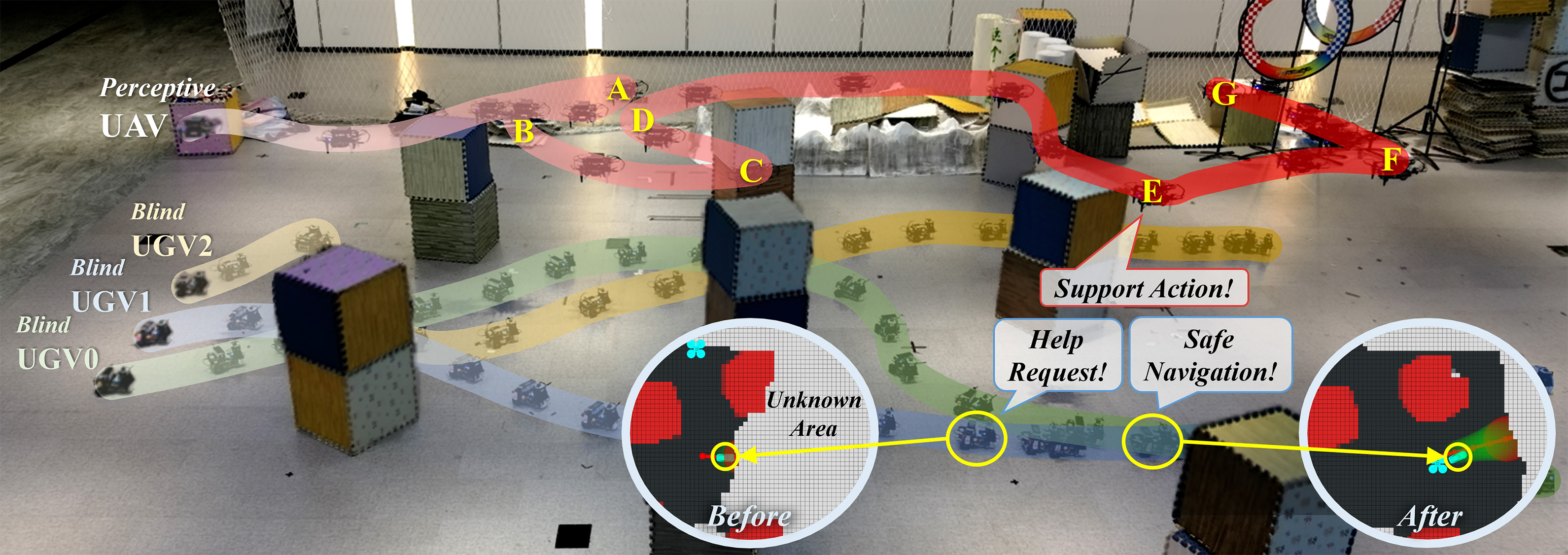

The drone, equipped with radar and a CREPES device, runs a SLAM algorithm and serves as the leader of the system. The unmanned vehicle carries only a CREPES device to obtain relative position information, and it explores the environment under the drone's guidance.
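In a leader-follower setup like this, a follower that cannot localize itself can be placed in the leader's map by chaining two transforms: the leader's SLAM pose and the CREPES relative pose. The sketch below shows that composition. It also shows how a map-frame goal could be expressed in the follower's own frame. The function names and the idea of sending map-frame goals are assumptions for illustration, not a description of the authors' software.

```python
import numpy as np

def compose(R_ab, t_ab, R_bc, t_bc):
    """Compose rigid transforms: T_ac = T_ab * T_bc."""
    return R_ab @ R_bc, R_ab @ t_bc + t_ab

def follower_in_map(R_map_leader, t_map_leader, R_leader_follower, t_leader_follower):
    """Follower position in the leader's SLAM map frame."""
    _, t_map_follower = compose(R_map_leader, t_map_leader, R_leader_follower, t_leader_follower)
    return t_map_follower

def goal_in_follower_frame(R_map_leader, t_map_leader, R_leader_follower, t_leader_follower, goal_map):
    """Express a map-frame goal in the follower's body frame.

    R_map_leader, t_map_leader: leader pose from SLAM.
    R_leader_follower, t_leader_follower: follower pose relative to the leader (CREPES).
    """
    R_mf, t_mf = compose(R_map_leader, t_map_leader, R_leader_follower, t_leader_follower)
    return R_mf.T @ (goal_map - t_mf)
```

Suppose the leader sits at (10, 0, 0) in the map with a 90° yaw, and the follower is 1 m ahead of it with no relative rotation. The follower is then at (10, 1, 0) in the map. A goal at (10, 3, 0) lies 2 m straight ahead in the follower's frame.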

@misc{xun2023crepes,
  title={CREPES: Cooperative RElative Pose Estimation System},
  author={Zhiren Xun and Jian Huang and Zhehan Li and Zhenjun Ying and Yingjian Wang and Chao Xu and Fei Gao and Yanjun Cao},
  year={2023},
  archivePrefix={IROS}
}